vfio-mdev

21 May 2017

Introduction

VFIO-mdev, or mediated devices, is one of the coolest additions in the land of virtualization. We’ve already talked about PCI passthrough, where we take a single PCI device within its smallest isolated group on the PCI bus and assign it directly to a virtual machine. That is great except for one thing: you are still limited to a 1:1 mapping of device to VM.

Now imagine being able to split a single device into multiple instances that partition its hardware resources.

Do you need to have 4 teams training and evaluating neural networks in parallel on a single machine? Or 32 designers who each need an accelerated virtual workstation?

Or both?

That is where vfio-mdev shines. It gives you the power to do both.

Development and Design

This is the first feature I have been part of since its early kernel development, so I feel inclined to talk about how decisions made early in the process shape the whole package, and about the experience of following that process all the way from the operating system up to your web browser.

VFIO mediated devices were merged in kernel 4.10, but even before that there was already a libvirt RFC discussing the user-level interface. This approach allowed software built on top of libvirt to start designing features even before the code was merged.

One of the early changes to the original kernel series was designing the sysfs interface as a directory tree instead of a flat, file-based hierarchy. I’m immensely grateful for this, as what we got at the lowest level is an easily understandable interface that looks like this:

    $ pwd
    /sys/class/mdev_bus
    $ tree -l -L 5 .
    ├── 0000:06:00.0 -> ../../devices/pci0000:00/0000:00:03.0/0000:04:00.0/0000:05:08.0/0000:06:00.0
    │   ├── mdev_supported_types
    │   │   ├── nvidia-11
    │   │   │   ├── available_instances
    │   │   │   ├── create
    │   │   │   ├── description
    │   │   │   ├── device_api
    │   │   │   ├── devices
    │   │   │   └── name
    ...

This single kernel patch series gives us the ability to create, list and delete mediated devices. No additional layers required, everything is accessible as a file based operation.
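To give a feel for how little is needed on top of sysfs, here is a minimal Python sketch that lists the supported types and creates an instance. It relies only on the `available_instances` and `create` files from the listing; the function names and the `bus` parameter (which lets the code run against a fake directory tree) are illustrative assumptions, not part of any existing tool.

```python
import os
import uuid

MDEV_BUS = "/sys/class/mdev_bus"  # path taken from the sysfs listing


def read_attr(path):
    """Return the stripped contents of a sysfs attribute file."""
    with open(path) as f:
        return f.read().strip()


def list_supported_types(bus=MDEV_BUS):
    """Yield (device, mdev_type, available_instances) for every type on the bus."""
    if not os.path.isdir(bus):
        return  # no mdev-capable devices on this machine
    for device in sorted(os.listdir(bus)):
        types_dir = os.path.join(bus, device, "mdev_supported_types")
        if not os.path.isdir(types_dir):
            continue
        for mdev_type in sorted(os.listdir(types_dir)):
            available = int(read_attr(
                os.path.join(types_dir, mdev_type, "available_instances")))
            yield device, mdev_type, available


def create_mdev(device, mdev_type, mdev_uuid=None, bus=MDEV_BUS):
    """Create a mediated device by writing a UUID into the type's create file."""
    mdev_uuid = str(mdev_uuid or uuid.uuid4())
    create = os.path.join(
        bus, device, "mdev_supported_types", mdev_type, "create")
    with open(create, "w") as f:
        f.write(mdev_uuid)
    return mdev_uuid
```

On a real host, writing to `create` requires root, and the chosen UUID is what later identifies the instance to libvirt.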

Great, but we need to get the mediated device instances to QEMU somehow. This is where libvirt shines, as it abstracts the complex QEMU command line into XML, where mediated device assignment can look as easy as

    <devices>
      <hostdev mode='subsystem' type='mdev' model='vfio-pci'>
        <source>
          <address uuid='c2177883-f1bb-47f0-914d-32a22e3a8804'/>
        </source>
      </hostdev>
    </devices>

as added by the libvirt patch series.
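For illustration, the same element can be generated programmatically. The sketch below uses Python's standard `xml.etree.ElementTree` to append an mdev `hostdev` to a domain XML string; the helper names are made up for this example, and this is not the code of any particular hook.

```python
import xml.etree.ElementTree as ET


def mdev_hostdev_xml(mdev_uuid):
    """Build the <hostdev> element for a mediated device with the given UUID."""
    hostdev = ET.Element(
        "hostdev",
        {"mode": "subsystem", "type": "mdev", "model": "vfio-pci"},
    )
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", {"uuid": mdev_uuid})
    return hostdev


def add_mdev_to_domain(domain_xml, mdev_uuid):
    """Append the hostdev element to the <devices> section of a domain XML string."""
    domain = ET.fromstring(domain_xml)
    devices = domain.find("devices")
    if devices is None:
        # A domain without any devices yet: create the section first.
        devices = ET.SubElement(domain, "devices")
    devices.append(mdev_hostdev_xml(mdev_uuid))
    return ET.tostring(domain, encoding="unicode")
```

Calling `add_mdev_to_domain("<domain><devices/></domain>", "c2177883-f1bb-47f0-914d-32a22e3a8804")` returns a domain whose `<devices>` section contains the element shown above.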

These two patch series gave me everything I needed to get vfio-mdev into oVirt. Since I believe Java is a plague that needs to be avoided, I’ve found a way to add the whole of vfio-mdev without excessive UI and backend logic changes, by simply implementing it as a VDSM hook.

VDSM vfio-mdev Hook

This section covers the implementation details; feel free to skip it.

Thanks to the simple sysfs interface and even simpler libvirt XML, the hook is quite small. Given the custom property mdev_type, we iterate over all the mdev-capable devices, descending into the /sys/class/mdev_bus/DEVICE/mdev_supported_types directory and looking for the given type. There are two constraints though.

1) Each mdev_type is a limited resource and only a certain number of instances can be allocated. Luckily, that number is exposed through /sys/class/mdev_bus/DEVICE/mdev_supported_types/TYPE/available_instances. If there are no more instances available, we have to move on to the next available device.

2) A slightly more annoying constraint is that some cards may not support heterogeneous type allocation. That means only instances of a single mdev type can be created per device. If that is the case, we first check that no instances of a different mdev type are allocated and only then create the new instance. I guess those devices have to be added manually and discovered by trial and error; that is one thing that could be improved at the kernel level.
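The two constraints translate into a short selection loop. The following Python sketch is one way to write it, not the hook's actual code: `homogeneous_only` is a hypothetical, manually maintained collection of device names that cannot mix types, and the `bus` parameter exists only so the function can be pointed at a test directory. It uses the per-type `devices` directory from the sysfs listing to see which types already have instances.

```python
import os

MDEV_BUS = "/sys/class/mdev_bus"


def _read_int(path):
    """Read a sysfs attribute file and parse it as an integer."""
    with open(path) as f:
        return int(f.read().strip())


def _has_other_types_allocated(types_dir, wanted_type):
    """True if any type other than wanted_type already has instances here."""
    for mdev_type in os.listdir(types_dir):
        if mdev_type == wanted_type:
            continue
        devices_dir = os.path.join(types_dir, mdev_type, "devices")
        if os.path.isdir(devices_dir) and os.listdir(devices_dir):
            return True
    return False


def find_device_for_type(wanted_type, homogeneous_only=(), bus=MDEV_BUS):
    """Return the first device able to host one more wanted_type instance, or None."""
    if not os.path.isdir(bus):
        return None
    for device in sorted(os.listdir(bus)):
        types_dir = os.path.join(bus, device, "mdev_supported_types")
        type_dir = os.path.join(types_dir, wanted_type)
        if not os.path.isdir(type_dir):
            continue  # this device does not offer the type at all
        # Constraint 1: the type is a limited resource.
        if _read_int(os.path.join(type_dir, "available_instances")) < 1:
            continue
        # Constraint 2: some devices cannot mix different types.
        if device in homogeneous_only and _has_other_types_allocated(
                types_dir, wanted_type):
            continue
        return device
    return None
```

A caller would treat a `None` result as "no capacity on this host" and fail the VM start or fall back accordingly.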

One interesting part is the device UUID. Mediated devices are created by writing a UUID into the mdev_supported_types/TYPE/create file. Initially, I used UUID version 4. That was a mistake. When ovirt-engine queries the state of the VM, it also remembers the devices we didn’t add as so-called “unmanaged” devices. Since the UUID was randomly generated at each start of the VM, these (by then gone) devices were piling up in the database. We therefore use a custom UUID version 3 based on an arbitrary namespace (that happens to be 8524b17c-f0ca-44a5-9ce4-66fe261e5986) and the VM’s name. That gives us a stable device UUID per VM name.
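The difference is easy to see with Python's standard `uuid` module. This is a small sketch of the idea using the namespace quoted above; the function name is an assumption for illustration.

```python
import uuid

# Namespace quoted in the text; any fixed UUID would work the same way.
MDEV_NAMESPACE = uuid.UUID("8524b17c-f0ca-44a5-9ce4-66fe261e5986")


def mdev_uuid_for_vm(vm_name):
    """Derive a stable, name-based (version 3) UUID for a VM's mediated device."""
    return str(uuid.uuid3(MDEV_NAMESPACE, vm_name))
```

Because version 3 UUIDs are an MD5 hash of the namespace and the name, calling `mdev_uuid_for_vm` twice with the same VM name yields the same value, whereas `uuid.uuid4()` returns a fresh random value on every call.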

oVirt and the Big Picture

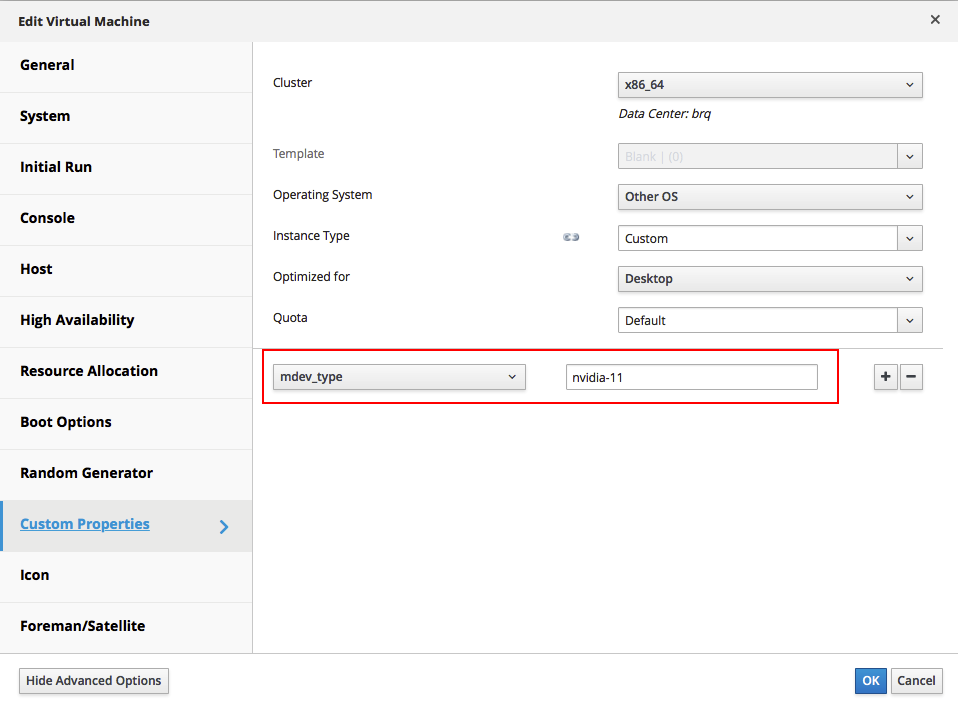

With the patches in place upstream and downstream, oVirt is able to create and assign mdev devices based just on the type of the device. To get up and running, all you have to do is install the vdsm-mdev-vfio hook on all hosts with mdev-capable devices and add the following custom property (on the ovirt-engine host):

    $ engine-config -s "UserDefinedVMProperties=mdev_type=^.*$"

After setting the custom property and pinning the VM to a host with the mdev-vfio hook installed, the hook takes care of everything that is needed to create and assign the device. The pinning itself is not required, but without it the VM could start on a host without the card or the hook installed, and thus end up without the mdev instance.

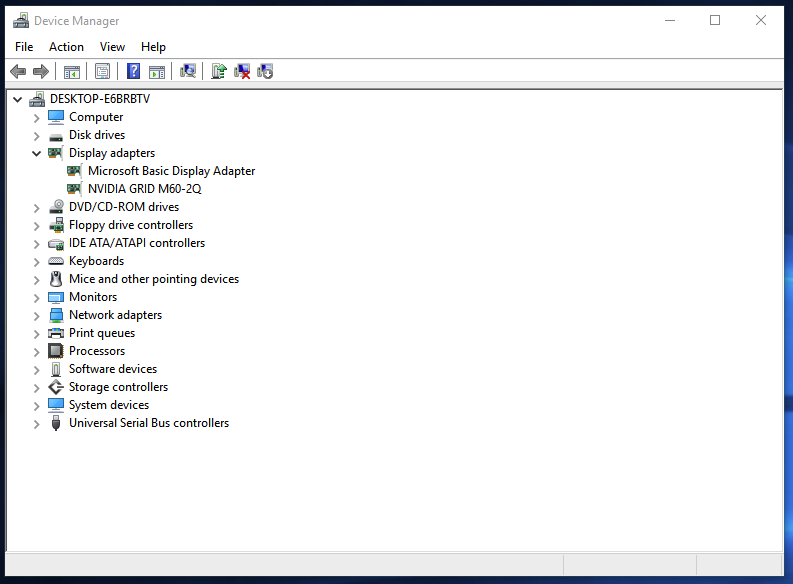

Then start the VM, wait a while and…

… we have a virtual GPU!

Future

We can create and assign an instance of an mdev type, but how do I figure out which types my card supports? Not easily yet. Let’s look at the information we can read from sysfs that may be relevant:

    $ tree -l -L 5 .
    ├── 0000:06:00.0 -> ../../devices/pci0000:00/0000:00:03.0/0000:04:00.0/0000:05:08.0/0000:06:00.0
    │   ├── mdev_supported_types
    │   │   ├── nvidia-11
    │   │   │   ├── description
    │   │   │   └── name

Should be enough, right? Except that description is literally free-form, vendor-created text. No format, just anything. That somehow works if you need to display all the available types and you’re SSH’d to the host:

    # Print every mdev type with its free-form description, de-duplicated.
    for device in /sys/class/mdev_bus/*; do
        for mdev_type in "$device"/mdev_supported_types/*; do
            MDEV_TYPE=$(basename "$mdev_type")
            DESCRIPTION=$(cat "$mdev_type/description")
            echo "mdev_type: $MDEV_TYPE --- description: $DESCRIPTION"
        done
    done | sort | uniq

But displaying this in a web browser? Not the brightest idea. Let’s hope that we can fix this in the future.

As for the vfio-mdev hook, I believe it is sufficient for the host side. The whole experience just feels incomplete though: the scheduler is not aware of mdev capabilities. That being said, the only parts required for that are ovirt-engine recognizing mdev type properties and a piece of code that uses that data to filter and score VMs in the external scheduler.
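To make the missing scheduler piece more concrete, here is a purely hypothetical Python sketch of the filter and score logic. It assumes the engine could hand the scheduler a mapping of host name to `{mdev_type: available_instances}`; that data structure, the function names and the "most free instances first" policy are all assumptions, and the code does not use the real external scheduler API.

```python
def filter_hosts(hosts, mdev_type):
    """Keep only hosts reporting at least one free instance of mdev_type."""
    return [name for name, types in hosts.items()
            if types.get(mdev_type, 0) > 0]


def score_hosts(hosts, mdev_type):
    """Order eligible hosts so that those with the most free instances come first."""
    candidates = filter_hosts(hosts, mdev_type)
    return sorted(candidates,
                  key=lambda name: hosts[name][mdev_type],
                  reverse=True)
```

With something like this in place, pinning a VM to a specific host would no longer be needed to guarantee that it lands where an instance can be created.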